Generative AI: Where Does Value Accrue and Where is Defensibility?

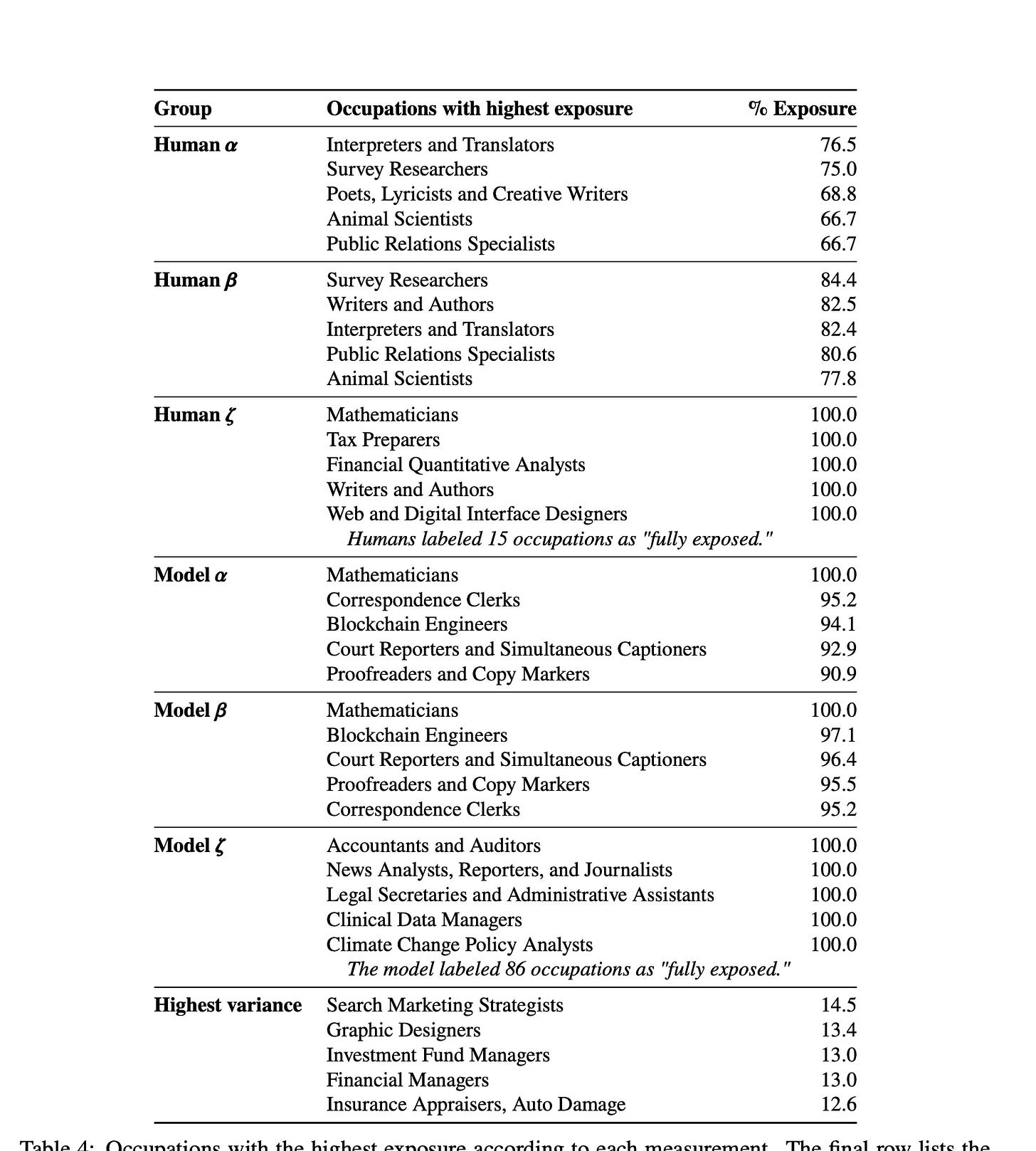

Generative AI has taken the world by storm and is reshaping nearly every facet of knowledge work. Before this shift, the prevailing wisdom was that technological advances would first automate blue-collar jobs and only later move on to white-collar jobs. However, with the advent of large foundation models across a multitude of modalities, we’re seeing the opposite play out.

Thus, it’s more critical than ever for builders, investors and innovators alike to delve deeper into AI and understand its implications and how best to leverage the technology to drive value. In the paradigm shift marked by this tidal wave of AI, two questions are critical to assess: where will value accrue in the ecosystem, and ultimately, where is there defensibility?

Where Does Value Accrue in Generative AI?

On a scorecard of 100 points, I think value will split roughly as follows:

Surfaces Where Knowledge Work Ensues With Strong Distribution & Data: 50%

There’s constant tension between two views: is this an enabling technology, or a fundamental platform shift? The answer is both, but on a spectrum, it leans more towards an enabling technology.

Folks often liken what’s happening with AI to a “Blockbuster” moment for the incumbent tech giants, but in actuality, it’s more analogous to how companies like Facebook leveraged the shift to mobile, which underpinned hundreds of billions of dollars of growth.

Mobile was Facebook’s biggest growth lever and magnified their user and business value. In a similar vein, generative AI will breed enormous value for a plethora of incumbent tech companies that have (i) massive brand presence, (ii) enormous distribution, (iii) deep data flywheels that can deliver curated experiences, and (iv) products that capture every pain point in a user’s workflow from start to finish.

We’ve already started to see this happen:

Intercom building AI-first chatbots and disrupting AI chat companies like Quickchat

Duolingo adding Max, disrupting companies like Quazel

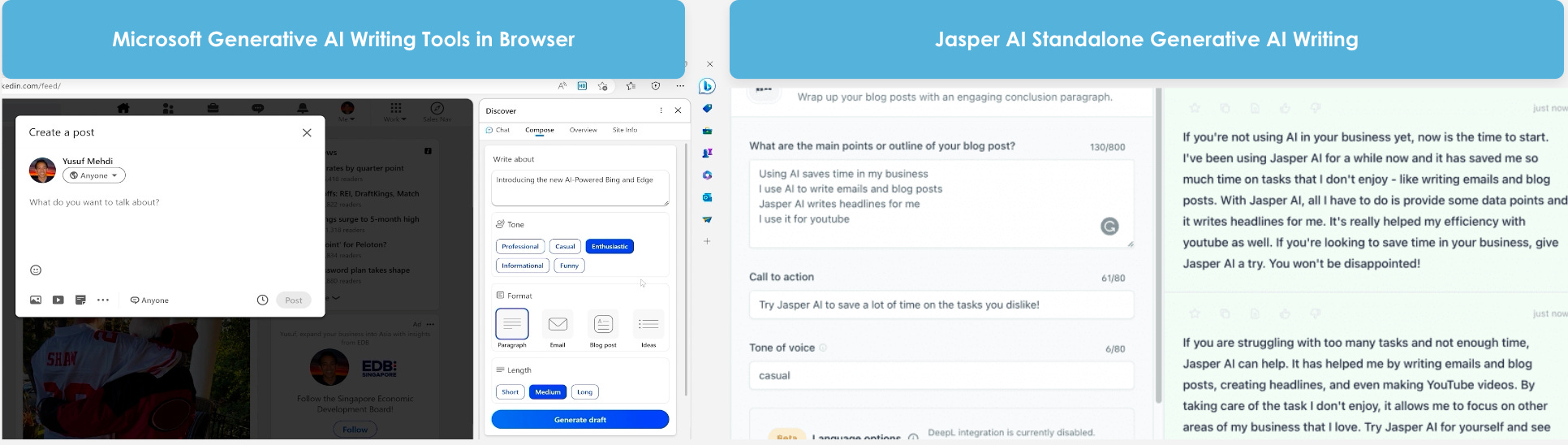

Microsoft embedding AI into their product suite, disrupting the likes of Jasper and Grammarly

Google embedding generative AI into Workspace, disrupting Tome and others

In each of the cases where there seemed to be an AI-first disruption, the incumbents have been able to come out of the woodwork with a parallel feature-set that was more deeply embedded and entrenched in the workflows of users. The question one must ask here is how does one differentiate in a hyper-competitive space with incumbents embedding AI features into their ecosystems?

That leads us to the 20% below.

Startups Building Application Layers with an AI-First Lens: 20%

A plethora of startups built from the ground up with an AI-first lens will be able to encroach on the incumbents or build in new blue oceans. These companies have, embedded in them, one or more of the key defensibility factors described in the defensibility section below. Particularly important are (i) deep verticalization into a segment’s workflow, (ii) creating, with an AI-first lens, new experiences that were previously impossible, and (iii) strong GTM capabilities.

Some examples that come to mind are:

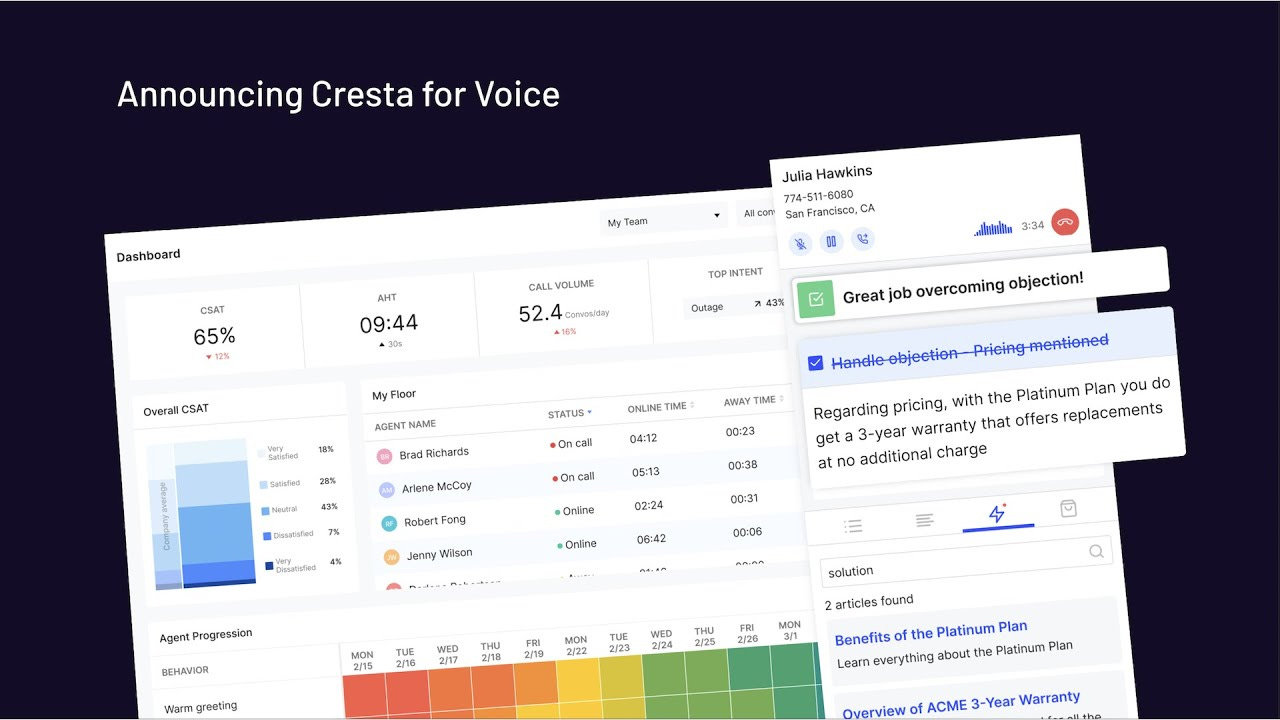

Cresta AI — Cresta is an ML-enabled suite of tools that contextually provides automated feedback, suggestions and guidance to sales teams to optimize their success at selling.

While Zoom, Otter, etc. may build similar features, the way in which Cresta has (i) verticalized around call centers with ICP-specific features, (ii) built data-driven flywheels that compound the accuracy of its suggestions, and (iii) established a strong B2B presence will continue to compound value for them. Of course, there is the open question of how much of sales and call-center work can be automated with AI, but the full scope of that remains to be seen.

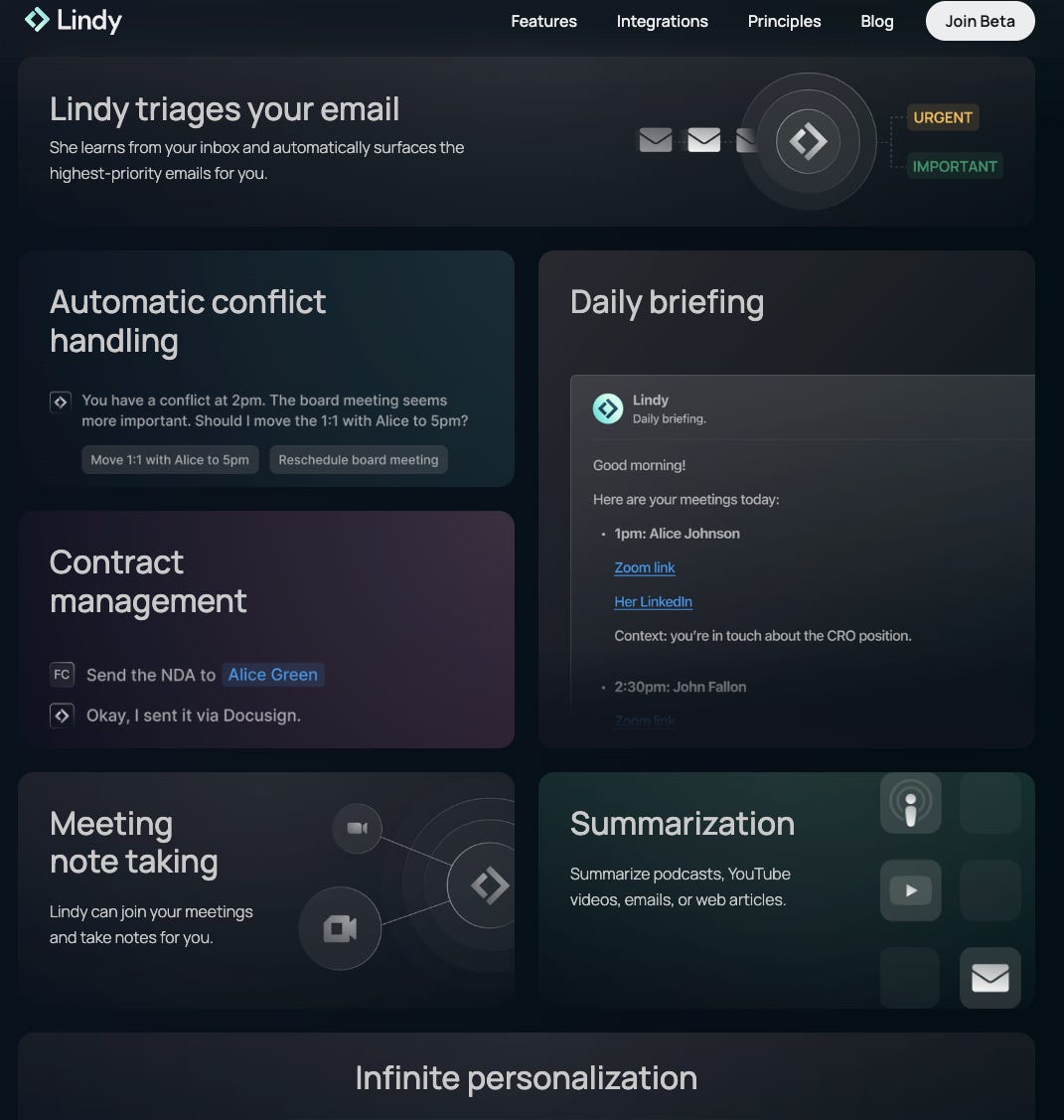

Lindy AI: The idea of an automated personal assistant for your life has been in vogue for decades. While ChatGPT can perform a small subset of tasks, it doesn’t automatically connect to every value center that exists in your life (although that is coming soon). A system that automatically understands who you are, your personal information, and the context of your calendar, CRM, email, meetings, and messages, and that can auto-schedule, auto-summarize, auto-draft, auto-take notes, auto-triage email, auto-respond and auto-complete tasks for you, is uniquely possible for the first time with this wave of foundation models. Again, the data flywheel of uniquely understanding your email behavior, your calendar behavior, and your task lists is hugely valuable and differentiating in building a tool like this.

Character AI: This is a tool that enables anyone to “chat with” any luminary figure, from former presidents to Elon Musk to Harry Potter. While the magnitude of revenue created over time is debatable, this is emblematic of building with an AI-first lens. This is a product that could not have existed in the pre-generative AI world, and it is a new mechanism through which people can choose an AI expert or figure to converse with.

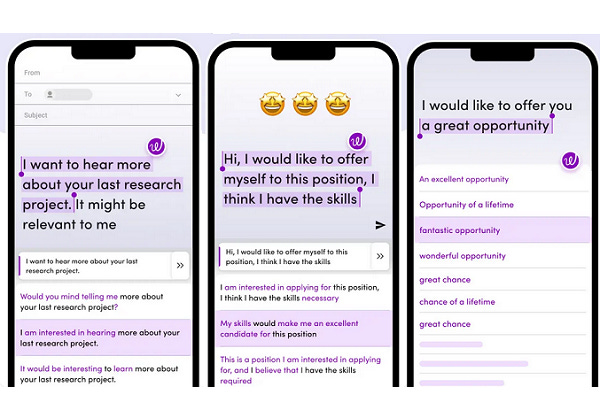

WordTune: While the competitive set is getting stronger in the core space, it’s worth noting the productization around the keyboard. WordTune has built a uniquely vertical experience for mobile. While a multitude of platforms compete with the core value proposition here (including ChatGPT), building for the mobile keyboard gives them a unique advantage to play where only a few companies will choose to. Further, there are enormous switching costs to move from one keyboard to another once users have adopted one. First-mover advantages and strong, curated UI/UX layers built for specific surfaces are interesting levers of differentiation. This is theoretically much more efficient than a user generating text in another app, copying it and pasting it into a new window.

Descript: Descript is one of the most powerful podcasting tools, with the marquee feature of live-editing: injecting and deleting words, phrases, etc. in the context of your podcast. While the underlying tech can be replicated, they have (i) a first-mover advantage, (ii) built an AI-first feature set and product unlike incumbents, and (iii) fundamentally rethought users’ workflow for creating and editing podcasts.

Rewind: Rewind and similar apps enable you to search the entire corpus of your digital footprint. While a few companies have tried to build a search layer on top of all the information you’ve ever interacted with, the advent of embeddings means that you can now search for anything in natural language. One could imagine the next step being to help you create documents, emails, presentations, messages, and announcements using your unique voice, context and digital footprint. It remains to be seen what enterprise value is created here, but this is an interesting AI-first lens that is differentiated.
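To make the embeddings point concrete, here is a minimal sketch of similarity search over a personal corpus. It is not how Rewind or any specific product works: the `embed` function is a toy bag-of-words stand-in for a learned embedding model, and the `footprint` snippets are invented for illustration. The idea it shows is simply that the query and the documents are mapped into the same vector space and ranked by cosine similarity.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k documents ranked by similarity to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:top_k]


# Hypothetical snippets from a user's digital footprint.
footprint = [
    "Meeting notes: quarterly budget review with the finance team",
    "Email: flight confirmation for the trip to Lisbon in May",
    "Slack message: the new onboarding deck is ready for feedback",
]

best_match = search("when is my flight to Lisbon", footprint)[0]
print(best_match)
```

A real system would swap `embed` for a learned model so that paraphrases with no shared words still match; the ranking step would stay the same.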

Infrastructure, Tooling and Orchestration Layers: 30%

Infrastructure layers like foundation models across modalities, orchestration layers, some MLOps platforms, and hardware giants (e.g. NVIDIA) will all win. OpenAI, Anthropic, Google, and the like will become the AWS, Azure, and GCP of this space.

There are a series of questions one must ask around the fringes of the infrastructure space:

What MLOps tooling needs to exist on top of these tools to provide asymmetric value?

But more importantly, which of these tools will still be around when foundation model companies grow?

How do we avoid companies building on top of OpenAI being disrupted by the next iteration of ChatGPT? How do we ensure they don’t face the fate of the tens of startups that failed because AWS emulated their feature sets, or of the companies that Apple “sherlocked”?

Do tools that help chain across models matter as the foundation models become multimodal and much larger in size?

Regardless, this segment will continue to hold enormous value as generative AI becomes more ubiquitous. Significant value will likely accrue to the oligopoly of foundation models that exist in the space.

Where Is There Defensibility In The Application Layer?

Unique Data Access & Data Flywheels

A substantial amount of value will accrue to companies that can leverage proprietary datasets to build unique products.

Think of medical companies feeding data flywheels of patient data to auto-suggest what drug to prescribe to a patient given (i) their medical history and (ii) millions of similar patients. This type of data is incredibly difficult to continuously embed in ChatGPT. Further, one could fine-tune on millions of data points to compare and uniquely predict drug prescriptions and outcomes.

Imagine Facebook leveraging the social graph to optimize every ad anyone publishes, uniquely customizing each one based on a person’s likes, preferences and interests. Every ad would look unique and cater specifically to individual users. Facebook could then leverage the data on what works and what doesn’t to continuously fine-tune its auto-generation of copy, images, and video to suit users better.

What if Toast leveraged POS data from all the restaurants in the world to build the best prediction engine imaginable for inventory and food management? This is proprietary data that most would not have access to, with the context of supply chains, order behavior across restaurants, and event-specific patterns.

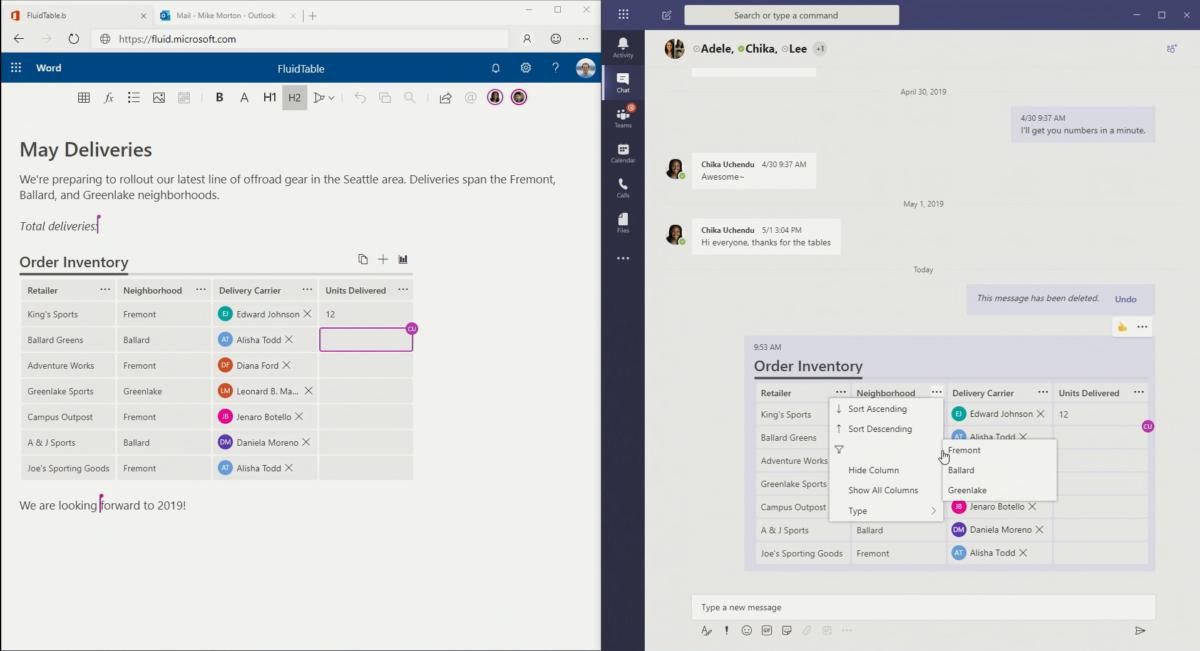

Microsoft is already leveraging the “knowledge graph” of users to augment their workflows with suggestions, document transformations, and email drafting, using the context of the users’ own documents, emails and presentations.

In each of these cases, as they continue to cultivate new data, the products get better. But what remains to be seen is which “proprietary” data flywheels hold value in the wake of the foundation models getting larger, and being extremely performant across more and more tasks.

Integration and Constant Ingestion of Data

Large language models, in their simplest form, function via next-word prediction. The context window, or “prompt”, is invaluable in helping the model understand what to output, which gave rise to “prompt engineering”. However, there’s only so much data one can embed in a prompt in a chat-like interface.

What if you wanted to input all your medical records and get a suggestion on what drug to take? ChatGPT likely wouldn’t have full access to something like this.

What if you wanted to embed your personal finance data and get suggestions on what to invest in, which buckets of expenses to spend on, and how to think about saving for your goals years down the line?

What if you wanted movie/tv/food/video recommendations based on your unique preferences, your history of consumption, the types of people you spend time with and the topics you spend the most time on?

What if you wanted it to assess your stock portfolio in the context of who you are and what your goals are?

What if you wanted an answer to a question, not synthesized from a corpus of billions of broad pieces of information but drawn specifically from the trusted article/research paper/book that you were reading?

What if you were drafting a long document or presentation and needed help brainstorming a topic in the context of what you were already writing and working on? One could imagine copying and pasting some version of this into a chat interface like ChatGPT, but a neatly packaged UI/UX layer that injects this data seamlessly and dynamically as it updates is enormously valuable in the moment.

There are ways to hack this into ChatGPT, but a neatly productized UI/UX layer that automatically pulls dynamic data on your consumption to give you actionable insights and suggestions could be enormously valuable. One could theoretically build data flywheels from these applications based on what worked and what didn’t (e.g. with restaurant recommendations) to continuously fine-tune the model.
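As a rough sketch of what such a layer does under the hood, the snippet below packs a user’s most recent records into a prompt under a fixed size budget. Everything here is hypothetical: the `Record` fields, the `build_prompt` helper, and the sample data are illustrative, and the budget is counted in characters for simplicity, whereas real models count tokens.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """One piece of user data; the fields are hypothetical."""
    timestamp: int  # larger means more recent
    text: str


def build_prompt(question: str, records: list[Record], max_chars: int) -> str:
    """Pack the most recent records that fit into a fixed character budget."""
    header = "Use the context below to answer.\n"
    footer = f"\nQuestion: {question}"
    budget = max_chars - len(header) - len(footer)
    chosen: list[Record] = []
    for record in sorted(records, key=lambda r: r.timestamp, reverse=True):
        line_cost = len(record.text) + 3  # "- " prefix plus trailing newline
        if line_cost > budget:
            continue  # skip records that do not fit in the remaining budget
        chosen.append(record)
        budget -= line_cost
    # Present the selected records in chronological order.
    chosen.sort(key=lambda r: r.timestamp)
    context = "".join(f"- {r.text}\n" for r in chosen)
    return header + context + footer


# Invented personal-finance records for illustration.
records = [
    Record(1, "January: rent 2000, groceries 450"),
    Record(2, "February: rent 2000, groceries 520"),
    Record(3, "March: rent 2100, groceries 480"),
]
prompt = build_prompt("Where is my spending growing?", records, max_chars=10_000)
print(prompt)
```

The product value described above lies in running this kind of assembly automatically, every time the underlying data changes, rather than asking the user to paste it in by hand.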

Capturing the Entirety of Value Chains

Companies that have best-in-class products that tackle the entirety of user journeys and have pre-existing distribution are poised to succeed.

Take Facebook as an example again: instead of needing to use one of the thousand text-to-image startups, Facebook could create a self-serve platform for ads that automates this process. Users could then go to the platform to (i) generate the ad visuals, (ii) generate the ad copy, (iii) push it through the surface on which they want the ads to be viewed, and (iv) have every ad adapted to fit the social graph of each individual.

Similarly, Amazon could build a chat interface into their product that enables you to ask anything and get something auto-ordered to your door. One would go to Amazon and type “I need to plan a Christmas party for 15 that’s gold themed”. It would then auto-source, buy, and deliver all the items to your home straight from that prompt.

As I alluded to above, Microsoft has already started to do this with Copilot in their 365 suite and the Fluid Framework.

Every document that anyone creates is modular, and the information can sync across modalities, from Teams to a Word document to a presentation to an email. One can auto-generate a transcript of a Teams call and have it summarized in a Word document. They can then ask Copilot to transform that document into a beautifully crafted presentation. Thereafter, they can have that shared via Outlook or Teams and auto-generate the email blurb based on the context of the conversation. This is emblematic of owning the entire productivity journey for users.

This dovetails with the 50% of value above that accrues to large incumbents.

The surfaces where knowledge work already happens have, in large part, captured most of the user journeys in knowledge work. They have the unique ability to create orders of magnitude more value by embedding gen AI in the workflow of most users. However, it’ll be interesting to explore other user journeys that aren’t fully served by companies today and the AI-first angle that could be built (e.g. Harvey for legal).

Usage-Based Recommendations, Guidance, Playlists

One product type that is much more difficult for a chat interface like ChatGPT to disrupt is the high-consumption, high-engagement product that is fueled by recommendations.

Take Netflix: Netflix has an enormous context window of content that users have engaged with for years. They have this data across (i) millions of users’ engagement patterns and behaviors, (ii) each user’s personal viewing history, ratings, and what they binge versus don’t, and (iii) the shows and movies that users churn from. They can leverage this data over time to even auto-generate videos, TV shows, and movies that are curated for one’s preferences, likes and viewing behavior. There’s a level of value created here that one could not get from simply inputting preferences into a chat window or third-party app.

Take music: ChatGPT may be able to recommend you music based on listening history, but a company like Spotify can (i) build much deeper recommendations based on years and thousands of hours of your history, (ii) build them into a curated playlist in the context of an app that you use regularly, (iii) use data flywheels based on your actual engagement with recommendations to continuously adapt your playlists, (iv) generate music content catered to you, and (v) supplement it with the social graph of listening that a user has. Spotify has an enormous footprint of data on millions of users that they can continuously fine-tune on. This type of recurring usage-based recommendation data is something ChatGPT or most other competitors would not have access to.
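The engagement flywheel in point (iii) can be sketched as a simple feedback loop. This is a toy illustration under stated assumptions, not Spotify’s method: the `PlaylistRecommender` class, the genre-level scores, the learning rate, and the catalog are all hypothetical. Each completed listen nudges a genre’s score toward +1, each skip nudges it toward -1, and recommendations are re-ranked from those scores.

```python
from collections import defaultdict


class PlaylistRecommender:
    """Toy recommender that learns genre preferences from plays and skips."""

    def __init__(self, learning_rate: float = 0.2) -> None:
        self.learning_rate = learning_rate
        self.genre_score: dict[str, float] = defaultdict(float)

    def record_feedback(self, genre: str, completed: bool) -> None:
        """Move the genre score toward +1 on a full listen, -1 on a skip."""
        target = 1.0 if completed else -1.0
        current = self.genre_score[genre]
        self.genre_score[genre] = current + self.learning_rate * (target - current)

    def recommend(self, catalog: dict[str, str], k: int = 2) -> list[str]:
        """Return the k tracks whose genres currently score highest."""
        ranked = sorted(
            catalog,
            key=lambda track: self.genre_score[catalog[track]],
            reverse=True,
        )
        return ranked[:k]


# Hypothetical catalog mapping track name to genre.
catalog = {"Track A": "jazz", "Track B": "rock", "Track C": "jazz", "Track D": "pop"}

recommender = PlaylistRecommender()
for _ in range(3):
    recommender.record_feedback("jazz", completed=True)  # three full listens
recommender.record_feedback("rock", completed=False)  # one skip

top_tracks = recommender.recommend(catalog)
print(top_tracks)
```

The point of the sketch is that the signal comes from repeated in-app behavior, which a one-off chat prompt cannot supply.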

Traditional Growth Levers: In-Product Growth Loops, Network Effects, Marketplace Dynamics, etc.

In any hype-cycle it’s important to understand and rationalize the constants. The traditional characteristics that drove trillions of dollars of value creation across hundreds of businesses will continue to hold true.

Network effects driven through collaboration or deep social and referral features, as in Google Docs, Miro, etc.

In-product growth loops that drive users to engage more deeply with the product at hand, like the templates feature in Notion, Coda, and Craft.

Marketplace flywheel dynamics where every incremental unit of supply creates incentive for added units of demand and vice versa, as with Uber, DoorDash, and Craigslist.

Deep integrations into workflow, like Microsoft owning every part of the productivity journey and building out Fluid components that work seamlessly across every app.

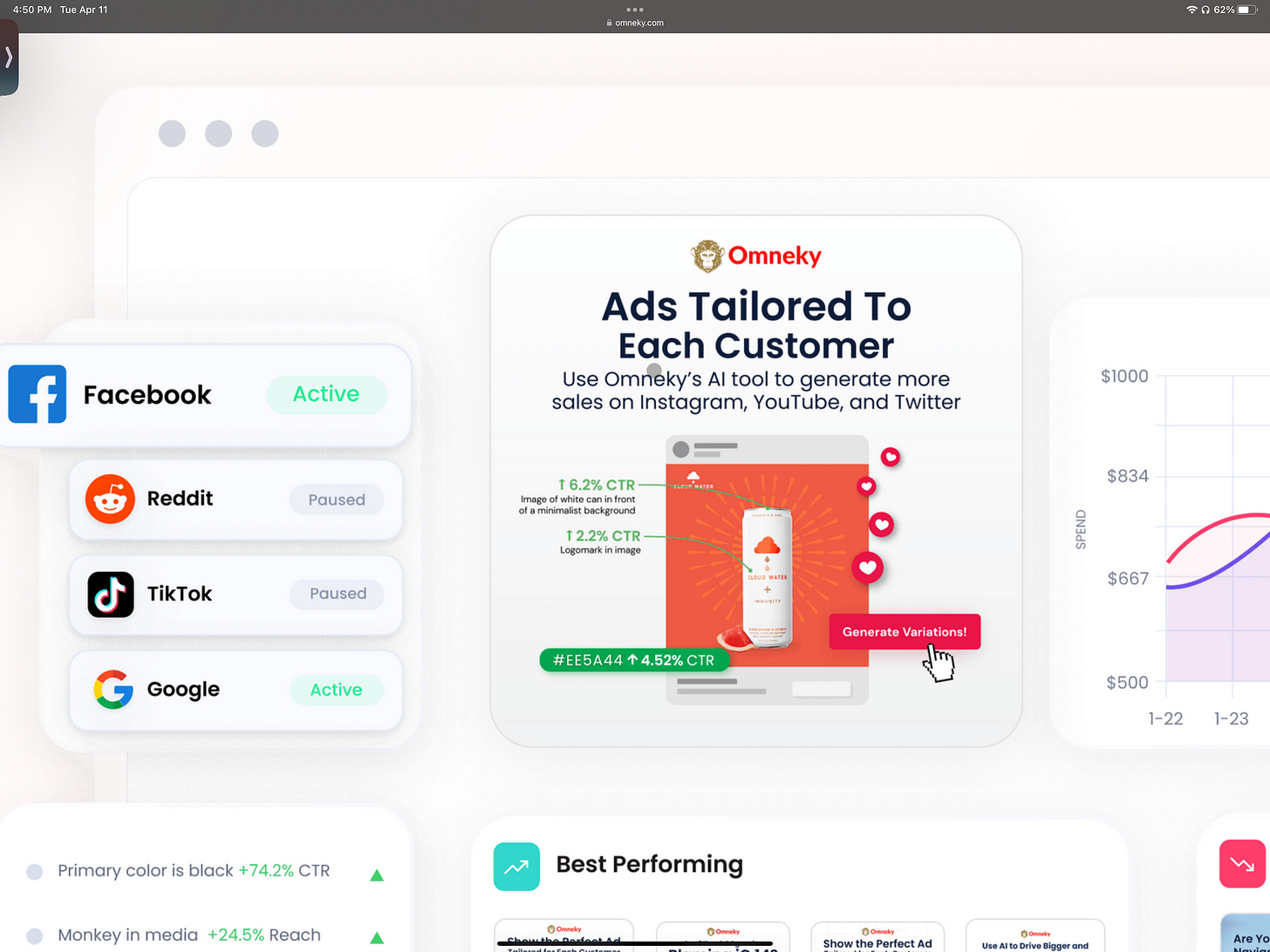

Quantifiable benefits versus outputs: Most AI companies today focus on the output of a specific instruction or prompt. While this is valuable, what most of these companies are missing is the actual quantifiable benefit that a user receives from it. Imagine if Jasper could not just draft ad copy but also tell you the expected CTR of using one phrase versus another. Though I’m not aware of its efficacy, Omneky is emblematic of pushing the envelope on this kind of thinking. It shows where a user can expect an X% lift based on the suggestions it makes and the images it creates.

UI/UX paradigms where chat interfaces struggle: There are many instances where a prototypical chat interface struggles to drive value for users. A good example is the WordTune keyboard, which offers live paraphrasing and generation in the context of the message window, browser tab, or Reddit forum that a user is in. This is a much simpler and more effective way to inject text than having to go to a new app or window, use another interface and copy the result over.

Ubiquity: In contrast to building deep in one vertical and capturing every part of the journey, extension-like products aim to be more horizontal in nature. This is valuable if you’re able to build, retain and leverage context across a multitude of sources to provide value wherever a user is (email, calendar, text, Zoom, Slack, etc.). The ease of capturing inputs and information that exist in the user’s window is a real advantage, and one that a ChatGPT would struggle to get. Further, an extension should be able to gather more inputs from users than a typical product that is verticalized for a specific journey, since such products typically only have access to information from a specific flow. For example, Microsoft, unlike a LanguageTool extension, would not have access to data from Notion, Slack, messages, and other sources.

Companies that build in this world need to stick to the fundamentals of what builds a predictable, sustainable business that has unique competitive moats which become difficult to encroach on.

Go To Market

There are a plethora of preconceived notions around companies being wrappers on a foundation model and thus facing extreme commoditization risk. While this may be true in most cases, it’s critical to assess the GTM capabilities of the org and how the company intends to scale.

In an era where these LLMs make product velocity and engineering throughput near-instantaneous, anyone will, over time, be able to ask ChatGPT to build them the next Stripe, WhatsApp, Dropbox, etc. Product differentiation becomes much more muted when anyone can prompt the engine to build any kind of product with a specific UI/UX. In such a world, GTM becomes one of the strongest sources of differentiation versus incumbents. Some examples below:

Companies with strong B2B prowess and funnels that make customers sticky are likely to win. Think about a new AI SaaS company focused on retailers with connections into the C-suite at Walmart, Target, Kohl’s, and Macy’s. Think about an insurtech AI company with connections into Geico, Allstate, and Nationwide. It would be tough to compete against such a company.

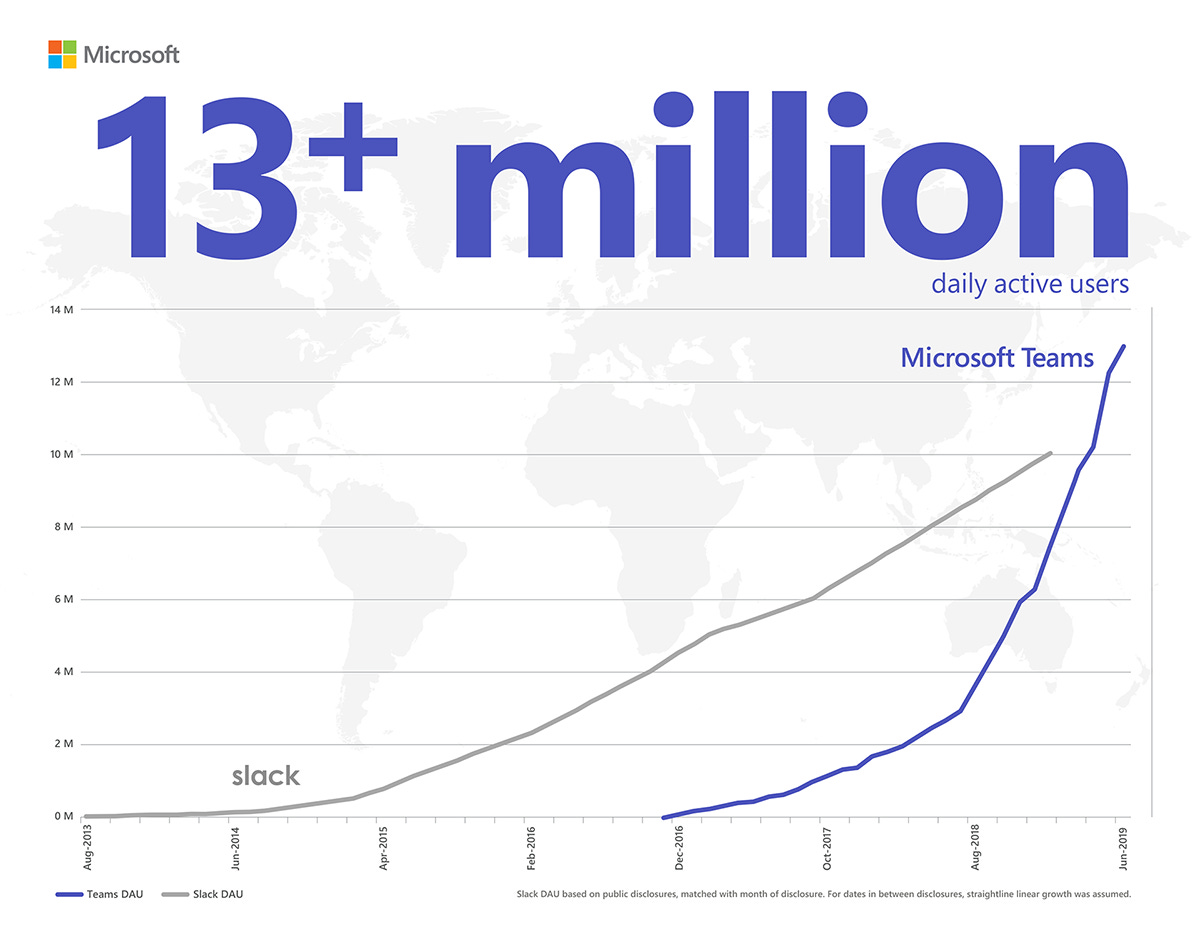

Companies that can bundle new product offerings into adjacent ones, used by customers that already trust them to build unique experiences, will have a strong head start. We’ve already seen the lock-in Microsoft has achieved with its bundles and how companies like Slack have fared in the wake of this. This will continue to be a lever the giants pull to build more lock-in and adoption for new products.

Teams that have extreme expertise in tapping into specific channels like influencer marketing have an enormous head start. Think of a team that’s connected with the most prominent influencers on TikTok and YouTube and can leverage their brand presence to bolster growth.

All in all, it’ll be incredibly interesting to watch the evolution of these foundation models and the ripple effects they have throughout society.